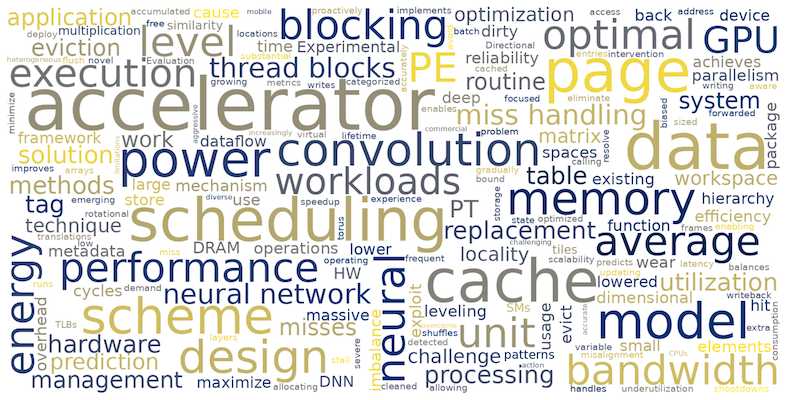

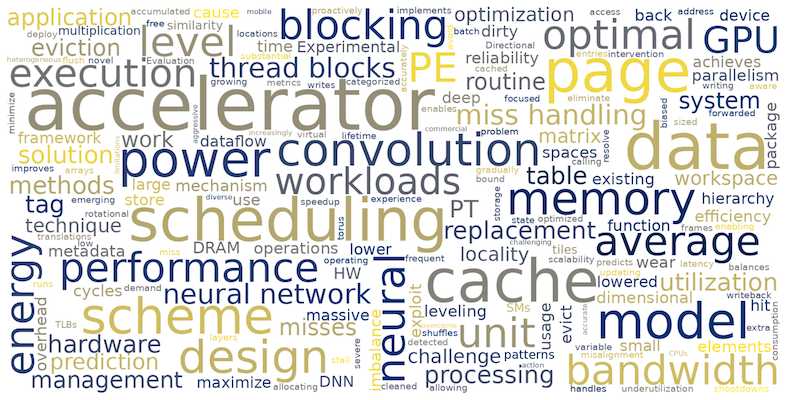

Research Keywords

The word cloud below was generated from the abstracts of papers we have published since 2023. It offers a quick snapshot of our lab’s research interests.

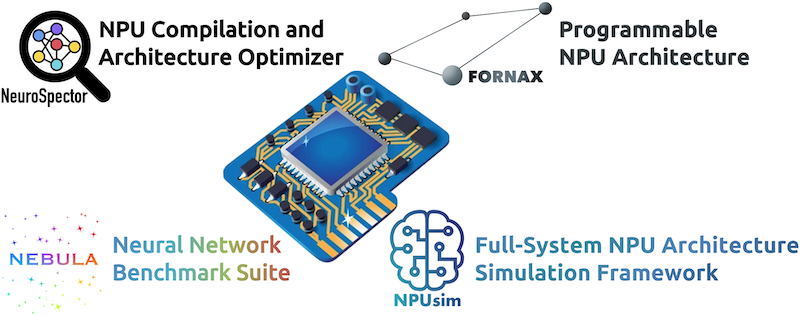

Neural Processing Units

As Moore’s Law nears its end, computing systems are moving toward specialization: executing a workload across multiple heterogeneous processing units. With rapid advances in deep learning, neural networks have become important workloads for computer systems. These trends have sparked a race to develop neural processing units (NPUs), processors specialized for neural networks. As deep learning techniques mature, developing and analyzing NPUs becomes increasingly complicated. We tackle this challenge through three approaches: i) creating a neural network benchmark suite, ii) developing a full-system NPU architecture simulation framework, and iii) designing memory-centric and reconfigurable NPUs. Our benchmark suite, Nebula, implements full-fledged neural networks along with lightweight versions of them; the compact benchmarks enable quick analysis of NPU hardware. NeuroSpector is an optimization framework that systematically identifies the optimal NPU scheduling schemes for diverse neural networks. NPUsim is a full-system NPU architecture simulation framework that performs cycle-accurate timing simulation with in-place functional execution of deep neural networks. It supports fully reconfigurable dataflows, accurate energy and cycle cost calculations, and cycle-accurate functional simulation along with sparsity, compression, and quantization schemes embedded in the NPU hardware. We envision that these frameworks will propel related research toward memory-centric, reconfigurable NPUs.
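
The kind of cost model that scheduling and simulation frameworks evaluate can be illustrated with a toy example. The sketch below is not the method of NeuroSpector or NPUsim: it assumes a single GEMM layer, one on-chip buffer that keeps each output tile resident while the reduction dimension streams through, and hypothetical per-access energy constants (`DRAM_PJ`, `BUF_PJ`, `MAC_PJ`). It then exhaustively searches tile sizes that fit the buffer. All function names are hypothetical.

```python
from itertools import product

# Hypothetical per-access energy costs in picojoules per word; illustrative only.
DRAM_PJ = 200.0
BUF_PJ = 6.0
MAC_PJ = 0.2


def divisors(n):
    """Tile sizes that evenly divide a dimension (keeps the traffic formula exact)."""
    return [d for d in range(1, n + 1) if n % d == 0]


def dram_traffic(M, N, K, tm, tn):
    """DRAM words moved for C[M,N] = A[M,K] @ B[K,N] with loop order m-tile, n-tile, k.

    Each (tm x tn) output tile stays in the on-chip buffer while K streams through,
    so A is re-read once per n-tile, B once per m-tile, and C is written once.
    """
    a_reads = M * K * (N // tn)
    b_reads = K * N * (M // tm)
    c_writes = M * N
    return a_reads + b_reads + c_writes


def best_tiling(M, N, K, buf_words):
    """Exhaustively search (tm, tn, tk) tilings that fit the buffer; minimize energy."""
    best = None
    for tm, tn, tk in product(divisors(M), divisors(N), divisors(K)):
        footprint = tm * tk + tk * tn + tm * tn  # A tile + B tile + C tile
        if footprint > buf_words:
            continue
        traffic = dram_traffic(M, N, K, tm, tn)
        macs = M * N * K
        # Each MAC reads one A and one B operand from the buffer.
        energy_pj = traffic * DRAM_PJ + macs * (MAC_PJ + 2 * BUF_PJ)
        if best is None or energy_pj < best[0]:
            best = (energy_pj, (tm, tn, tk), traffic)
    return best  # None if no tiling fits the buffer


energy, tiles, traffic = best_tiling(64, 64, 64, buf_words=4096)
```

For a 64×64×64 layer and a 4096-word buffer, a full 64×64 output tile does not fit, so the search settles on a 32×64 output tile. Real frameworks model many more levels (PE arrays, register files, multiple buffers) and loop orders; this only shows why tiling choices change off-chip traffic and energy.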

Students: Chanho, Xingbo

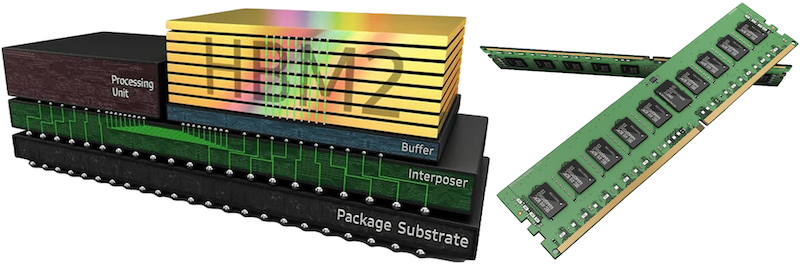

Scalable Memory Systems for High-Performance Computing

As emerging applications increasingly demand greater memory bandwidth and capacity, memory has become a critical performance bottleneck of computing systems. The virtualization of heterogeneous processing units, including CPUs, GPUs, and accelerators, further complicates memory management. Resolving these problems calls for innovative memory-system solutions, such as heterogeneous memory designs that pair high-bandwidth on-package DRAM with large-capacity off-package memory, large-reach, low-overhead memory virtualization schemes, and processing-in/near-memory designs. These transformative changes raise a number of engineering challenges in computer architecture and operating systems. Our research focuses on developing architectural and OS-level support for efficient memory management in high-performance computing systems.
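
Two of the ideas above reduce to simple arithmetic. The sketch below is a back-of-the-envelope illustration, not one of our systems: it computes TLB reach (entries × page size), which large-reach virtualization schemes aim to expand, and a greedy hot-page placement for a two-tier memory with an average-latency estimate. The TLB size, access trace, and latencies are hypothetical.

```python
KiB = 1024
MiB = 1024 * KiB
GiB = 1024 * MiB


def tlb_reach(entries, page_bytes):
    """Bytes a TLB can map without missing: number of entries x page size."""
    return entries * page_bytes


def place_hot_pages(access_counts, fast_capacity_pages):
    """Greedy placement: put the most-accessed pages in the fast (on-package) tier.

    Returns the set of page indices placed in the fast tier and the fraction of
    accesses those pages serve.
    """
    ranked = sorted(range(len(access_counts)), key=lambda p: access_counts[p], reverse=True)
    fast = set(ranked[:fast_capacity_pages])
    served = sum(access_counts[p] for p in fast)
    return fast, served / sum(access_counts)


def avg_latency_ns(fast_fraction, fast_ns, slow_ns):
    """Average memory latency when a fraction of accesses hits the fast tier."""
    return fast_fraction * fast_ns + (1 - fast_fraction) * slow_ns


# Hypothetical numbers for illustration: a 1536-entry TLB and a toy 5-page trace.
print(tlb_reach(1536, 4 * KiB) // MiB, "MiB with 4 KiB pages")
print(tlb_reach(1536, 2 * MiB) // GiB, "GiB with 2 MiB pages")
fast, frac = place_hot_pages([100, 5, 50, 1, 44], fast_capacity_pages=2)
print(sorted(fast), frac, avg_latency_ns(frac, fast_ns=100, slow_ns=300))
```

With these numbers, switching from 4 KiB to 2 MiB pages extends reach from 6 MiB to 3 GiB, and placing the two hottest pages on package serves 75% of accesses. Practical placement policies must also account for migration cost and changing access patterns, which this sketch ignores.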

Students: Youngin, Haneul, Dain

GPU Microarchitecture for Deep Learning

Graphics processing units (GPUs) have served as the de facto hardware for computing deep learning algorithms. Massively parallel execution in a single-instruction, multiple-thread (SIMT) fashion enables GPUs to achieve substantially higher throughput than conventional CPUs. However, GPUs still fall short of the throughput that deep learning workloads demand. Tensor cores, introduced in recent NVIDIA GPUs, are specialized execution units dedicated to matrix-multiply-accumulate operations, designed to accelerate neural operations. By operating directly on matrices instead of scalar data elements, tensor cores provide far greater throughput than conventional CUDA cores. However, raising the compute throughput of GPUs with tensor cores makes DNN applications more memory-bound, because memory bandwidth does not grow at the same rate. Our research focuses on improving the memory management efficiency of GPUs with tensor cores for deep learning applications.
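
Why tensor cores push DNN workloads toward being memory-bound follows from the roofline model: attainable throughput is the lesser of peak compute and memory bandwidth times operational intensity (FLOPs per byte of DRAM traffic). Raising the compute roof while bandwidth stays fixed moves the ridge point to a higher intensity, so kernels that were compute-bound become memory-bound. The sketch below uses hypothetical GPU numbers, not those of any specific NVIDIA product, and an idealized GEMM that touches each operand in DRAM exactly once.

```python
def attainable_tflops(intensity_flop_per_byte, peak_tflops, bw_tb_per_s):
    """Roofline model: min(compute roof, bandwidth x operational intensity)."""
    return min(peak_tflops, intensity_flop_per_byte * bw_tb_per_s)


def ridge_point(peak_tflops, bw_tb_per_s):
    """Operational intensity (FLOP/byte) below which a kernel is memory-bound."""
    return peak_tflops / bw_tb_per_s


def gemm_intensity(m, n, k, bytes_per_elem=2):
    """Ideal intensity of C[m,n] = A[m,k] @ B[k,n]: 2mnk FLOPs over one read of A and B and one write of C."""
    flops = 2 * m * n * k
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


# Hypothetical GPU: 2 TB/s of DRAM bandwidth, 20 TFLOP/s on CUDA cores,
# 160 TFLOP/s on tensor cores. This FP16 GEMM has intensity 40 FLOP/byte.
ai = gemm_intensity(120, 120, 120)
for name, peak in [("CUDA cores", 20.0), ("tensor cores", 160.0)]:
    bound = "memory" if ai < ridge_point(peak, 2.0) else "compute"
    print(f"{name}: {attainable_tflops(ai, peak, 2.0):.0f} TFLOP/s, {bound}-bound")
```

The same GEMM is compute-bound on the slower units (ridge at 10 FLOP/byte) but memory-bound on the faster ones (ridge at 80 FLOP/byte), where bandwidth caps it at half of peak.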

Students: Joshua, Euijun, Mengjie

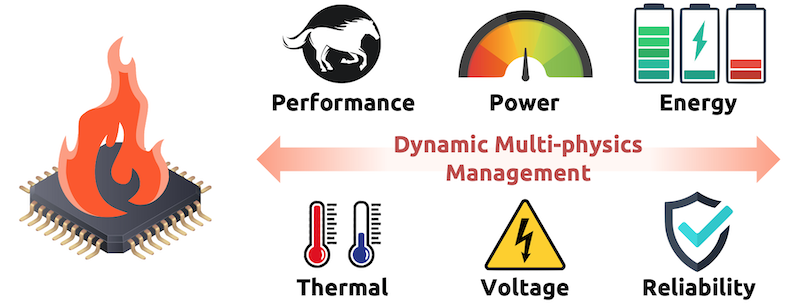

Machine Learning for Systems: Power, Thermal, and Reliability

The operation of contemporary computer systems is constrained by power, temperature, and reliability. These physical limits have pushed computer systems toward higher power efficiency (i.e., operations per watt), which requires innovation spanning devices and circuits to microarchitectures. Devising effective power, thermal, and reliability management requires a deep understanding of multi-physics phenomena. Microarchitectural activity in a processor consumes power, which is dissipated as heat. The resulting temperature rise in turn increases leakage power, creating a positive feedback loop between power and temperature. Higher temperature also harms reliability (e.g., through aging and failures). These complex multi-physics interactions ultimately constrain the operation and performance of computer systems. Our research interests include modeling multi-physics phenomena in heterogeneous computer systems and developing machine learning-based SoC power modeling and management solutions.
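
The power-temperature feedback loop can be made concrete with a lumped, first-order model. The sketch below is an illustration, not our modeling methodology: it assumes a single thermal resistance R_th between die and ambient, an exponential leakage model P_leak(T) = P_leak0 · exp(β(T − T0)), and a hypothetical divergence cutoff `T_RUNAWAY_C`; all parameter values are hypothetical. Iterating the loop either settles at a steady-state temperature or, when leakage grows faster than heat can escape, diverges, which corresponds to thermal runaway.

```python
import math

T_RUNAWAY_C = 500.0  # Hypothetical cutoff used only to detect divergence.


def steady_state(p_dyn_w, t_amb_c, r_th_c_per_w, p_leak0_w, t0_c, beta,
                 tol=1e-6, max_iter=10_000):
    """Solve T = T_amb + R_th * (P_dyn + P_leak(T)) by fixed-point iteration.

    Leakage follows the first-order model P_leak(T) = P_leak0 * exp(beta * (T - T0)).
    Returns (temperature_c, total_power_w), or None if the loop diverges
    (thermal runaway) or fails to converge.
    """
    t = t_amb_c
    for _ in range(max_iter):
        # Hotter silicon leaks more, raising total power ...
        p_total = p_dyn_w + p_leak0_w * math.exp(beta * (t - t0_c))
        # ... and more power raises temperature through the thermal resistance.
        t_next = t_amb_c + r_th_c_per_w * p_total
        if t_next > T_RUNAWAY_C:
            return None
        if abs(t_next - t) < tol:
            return t_next, p_total
        t = t_next
    return None


stable = steady_state(50, 45, 0.5, 10, 45, 0.02)   # settles near 80 C
runaway = steady_state(50, 45, 2.0, 10, 45, 0.05)  # no fixed point: None
print(stable, runaway)
```

In the first case leakage adds roughly 10 W on top of its ambient-temperature value before the loop settles; in the second, the higher thermal resistance and steeper leakage curve leave no fixed point. Real chips need spatially resolved thermal models and measured leakage characteristics.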

Students: Kyunam, Taesoo, Sehyeon, Hyun-Jae
